Supercharge Your Prompts, Supercharge Your AI

Want to get the most out of AI? Prompt engineering is key. This listicle provides seven practical prompt engineering tips to help you generate better content, streamline your workflow, and unlock the full potential of AI tools. Mastering these techniques, from specifying clear instructions to leveraging iterative refinement, is crucial for developers, data scientists, and technical project managers working with AI models. Learn how to effectively communicate with AI and achieve superior results.

1. Be Specific and Clear in Your Instructions

The cornerstone of effective prompt engineering is providing clear, specific, and unambiguous instructions to AI models. This fundamental principle dictates that the more precise and detailed your prompts are, the more accurate, relevant, and useful the AI-generated output will be. Vague or overly broad prompts often lead to generic responses that require extensive revisions, wasting valuable time and resources. This technique involves explicitly stating what you want the AI to produce, the desired format, and any specific constraints or requirements that should be considered. By eliminating ambiguity, you guide the AI towards the desired outcome, maximizing its potential and achieving your goals efficiently. This is especially crucial for technical audiences who require precise and reliable outputs for their projects.

This approach matters for several reasons. First, it reduces the need for multiple iterations and back-and-forth corrections: when the AI understands your requirements from the outset, it is far more likely to produce a usable output on the first try. Second, it makes output quality more consistent. Detailed instructions establish a clear framework, so the generated content adheres to your specifications across different prompts and sessions. This predictability is essential for maintaining quality and streamlining workflows. Finally, clear instructions tend to transfer well: a well-specified prompt usually works reasonably across different AI models and platforms, although results can still vary from one model to another.

For software developers, AI/ML practitioners, DevOps engineers, data scientists, and technical project managers, specificity in prompts translates to greater control over the AI's output and its integration into various projects. Whether you are generating code, documenting processes, creating test cases, or analyzing data, clear instructions help the AI align with your technical requirements. For example, when generating boilerplate code for a specific framework, a precisely worded prompt can yield ready-to-use snippets and save significant development time.

Let's illustrate the difference between a vague prompt and a specific one. Instead of asking the AI to "Write about marketing," a specific prompt would be: "Write a 500-word blog post about email marketing strategies for small e-commerce businesses, including 3 specific tactics and expected ROI." Similarly, instead of requesting "Help with my resume," a specific prompt would be: "Review my software engineer resume and provide 5 specific improvements for ATS optimization, focusing on technical skills and quantifiable achievements."

To craft highly effective prompts, consider the following actionable tips:

- Use numbers and metrics: Whenever possible, specify quantitative details like word count, number of points, percentages, or specific dimensions. This provides clear boundaries and ensures the output meets your length and scope requirements. For example, "Generate three Python functions, each fewer than 50 lines of code, to perform..."

- Specify the target audience: Clearly define who the output is intended for. This helps the AI tailor the language, tone, and complexity accordingly. For instance, "Explain Kubernetes networking to a non-technical audience."

- Include desired format: Indicate whether you want the output in bullet points, paragraphs, tables, code snippets, JSON, or any other specific format. For example, "Provide the results in a JSON array with the keys 'name', 'value', and 'timestamp'."

- State the purpose or goal of the output: Clearly articulate why you need this information and how it will be used. For instance, "Create a Python script to automate the deployment of a Docker container to AWS, aiming to reduce deployment time by 20%."

- Provide context about constraints or limitations: If there are specific limitations, such as platform compatibility, coding style guidelines, or budget restrictions, explicitly mention them in the prompt. For example, "Design a REST API endpoint in Java Spring Boot that conforms to the OpenAPI specification provided..."
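The tips above can be combined mechanically so that no component is forgotten. Below is a minimal Python sketch, assuming prompts are assembled as plain strings; the `build_prompt` helper, its parameter names, and the sample task are hypothetical illustrations, not part of any library.

```python
def build_prompt(task, audience=None, output_format=None, purpose=None, constraints=()):
    """Assemble a specific prompt from the components recommended above (hypothetical helper)."""
    parts = [f"Task: {task}"]
    if audience:
        parts.append(f"Audience: {audience}")
    if output_format:
        parts.append(f"Format: {output_format}")
    if purpose:
        parts.append(f"Purpose: {purpose}")
    if constraints:
        # One constraint per line keeps each requirement explicit.
        parts.append("Constraints:\n" + "\n".join(f"- {c}" for c in constraints))
    return "\n".join(parts)


prompt = build_prompt(
    task="Generate three Python functions, each fewer than 50 lines, that validate user input.",
    audience="Backend developers familiar with Flask",
    output_format="One fenced Python code block per function",
    purpose="Reuse in an existing request-validation module",
    constraints=["Use only the standard library", "Follow PEP 8"],
)
print(prompt)
```

Keeping each component on its own labeled line makes it easy to see at a glance which of the five tips a given prompt still lacks.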

While being specific offers significant advantages like improved response accuracy, time savings, and actionable outputs, it also requires more upfront thinking and planning. Crafting detailed prompts can sometimes be more time-consuming initially, and it might limit the AI's ability to produce unexpectedly creative responses. Furthermore, detailed prompting may require more domain-specific knowledge to articulate specific needs effectively. However, the advantages of clarity and control generally outweigh these limitations, particularly in technical contexts where precision is paramount. By mastering the art of specific prompt engineering, you can unlock the full potential of AI and integrate it seamlessly into your workflow.

2. Use Role-Based Prompting

Role-based prompting is a powerful prompt engineering technique that significantly enhances the quality and relevance of AI-generated responses. It involves instructing the AI to assume the persona or expertise of a specific professional, expert, or character. By assigning a role, you leverage the model's vast training data to access the language, knowledge, and perspective associated with that role. This leads to more targeted, authoritative, and contextually appropriate outputs, making your interactions with the AI more productive and efficient. This technique is valuable for a range of applications, from content creation and code generation to problem-solving and research. Its versatility and effectiveness make it a crucial tool in any prompt engineer's arsenal, ensuring you get the most out of your interactions with AI models.

This method works by conditioning the model on a persona. When you specify a role, the model tends to emulate the communication style, vocabulary, and reasoning patterns associated with that persona in its training data. For instance, if you ask the AI to "act as a senior software engineer," its response is more likely to reflect software engineering principles, best practices, and common terminology. The result is usually more informed, professional, and relevant to the given context. This focused approach can improve the depth of the output and also reduces the need for lengthy background explanations in your prompts, saving you time and effort.

Consider the following examples to understand the practical application and impact of role-based prompting:

- Finance: "Act as a senior financial advisor and explain the pros and cons of index fund investing for a 30-year-old beginner." This prompt elicits a response tailored to a specific audience (a beginner investor) and framed from an expert perspective (a senior financial advisor), providing clear, actionable information.

- Healthcare: "You are a pediatrician. Provide guidance for parents on managing their toddler's sleep regression." This prompt frames the query within the context of pediatric expertise, helping make the response appropriate for concerned parents seeking reliable advice.

- Cybersecurity: "As a cybersecurity expert, outline the top 5 threats facing small businesses in 2024." This prompt leverages the AI's knowledge of cybersecurity trends and best practices to provide a concise and informative overview relevant to a specific target audience (small businesses).

- Software Development: "As a senior Python developer, write a function that efficiently sorts a list of dictionaries based on a specified key." This prompt leverages specific coding knowledge within the AI model to generate functional code, demonstrating the practical application of role-based prompting for technical tasks.
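For reference, a correct answer to the software-development prompt above can be very short, which gives you a baseline for judging what the model returns. The sketch below uses Python's built-in `sorted` with `operator.itemgetter`; the function name and the decision to let a missing key raise `KeyError` are assumptions, since the prompt does not specify them.

```python
from operator import itemgetter


def sort_dicts_by_key(records, key, reverse=False):
    """Return a new list of dicts sorted by the value stored under `key`.

    sorted() is O(n log n) and stable, so records with equal values keep
    their original relative order. A record missing `key` raises KeyError.
    The input list is not modified.
    """
    return sorted(records, key=itemgetter(key), reverse=reverse)


people = [{"name": "Ada", "age": 36}, {"name": "Linus", "age": 28}, {"name": "Grace", "age": 45}]
print(sort_dicts_by_key(people, "age"))
# [{'name': 'Linus', 'age': 28}, {'name': 'Ada', 'age': 36}, {'name': 'Grace', 'age': 45}]
```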

Role-based prompting offers numerous advantages. It produces more professional and knowledgeable responses, automatically adjusting the complexity level appropriate to the assigned role. It reduces the need for extensive background explanations, streamlining the prompting process. Furthermore, it creates a consistent voice and perspective, enhancing the overall coherence and credibility of the AI-generated output.

However, there are also potential drawbacks to consider. Relying on stereotypical role expectations can introduce biases into the responses. Restricting the AI to a specific role may limit creative or interdisciplinary thinking. Furthermore, the technique might not be effective for novel or rapidly evolving fields where the training data is limited. Finally, there's a risk of the AI exhibiting overconfidence in its responses, potentially providing information beyond its actual knowledge base within the assigned role.

To effectively leverage role-based prompting, keep these tips in mind:

- Data Alignment: Choose well-established roles that are likely to be well represented in the model's training data; niche or newly invented roles tend to produce weaker results.

- Specificity: Combine roles with specific expertise levels (e.g., senior, junior, specialized) for more targeted responses.

- Contextualization: Include relevant context about the role's typical responsibilities and the situation at hand.

- Terminology: Use industry-specific language and terminology appropriate to the chosen role.

- Credentials: Consider adding credentials or experience level to further define the role (e.g., "a board-certified cardiologist").
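Many chat-style APIs accept a list of messages, each tagged with a role such as `system` or `user`, and a role-based prompt often goes in the system message. The Python sketch below only builds that list and does not call any API; the `build_role_messages` helper and the sample persona are hypothetical, and how the list is actually sent (some providers take the system text as a separate parameter) depends on your provider.

```python
def build_role_messages(role, level, question, credentials=None, context=None):
    """Build a chat-style message list that assigns the model a persona (hypothetical helper)."""
    persona = f"You are a {level} {role}"
    if credentials:
        persona += f" ({credentials})"  # credentials sharpen the expertise level
    persona += "."
    if context:
        persona += f" Context: {context}"  # the situation the role is working in
    return [
        {"role": "system", "content": persona},
        {"role": "user", "content": question},
    ]


messages = build_role_messages(
    role="cybersecurity consultant",
    level="senior",
    credentials="CISSP-certified, 15 years advising small businesses",
    context="The client is a 20-person accounting firm with no dedicated IT staff.",
    question="Outline the top 5 threats this business faces and one mitigation for each.",
)
print(messages[0]["content"])
```

Separating the persona from the question lets you reuse one carefully written role across many requests.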

Role-based prompting has been popularized through the OpenAI ChatGPT community, documentation for models like Anthropic Claude, and ongoing research by prompt engineering experts. By understanding this technique and its nuances, developers, data scientists, and other technical professionals can leverage the full potential of AI models for a wide range of tasks, making prompt engineering a more precise and powerful tool.

3. Implement Few-Shot Learning Examples

Few-shot learning is a powerful prompt engineering tip that significantly enhances the quality and consistency of outputs generated by large language models (LLMs). Instead of relying solely on explicit instructions, few-shot learning involves providing the model with a small set of examples demonstrating the desired input-output format. This technique helps the LLM understand the underlying patterns, style, and structure you expect, ultimately leading to more accurate and relevant responses tailored to your specific requirements. This approach bridges the gap between general LLM training and the specific nuances of your task, making it an invaluable tool in any prompt engineer's arsenal.

The core principle behind few-shot learning is mimicking the way humans learn through examples. Just as a child learns to identify objects by seeing a few labeled pictures, an LLM can infer how to perform a specific task from a few input-output pairs included in the prompt, without any change to its underlying weights (a behavior often called in-context learning). This approach reduces the ambiguity often associated with purely descriptive instructions, allowing the model to grasp complex or nuanced tasks more effectively. By demonstrating the desired format and style upfront, you guide the LLM towards the desired outcome, minimizing the need for extensive rewriting and revisions.

Few-shot learning offers several benefits: noticeably more consistent output, less need for highly detailed written instructions, and better handling of complex formatting requirements. It excels in tasks requiring a specific tone or style, such as creative writing or tailored marketing copy. Furthermore, it allows rapid adaptation to specific use cases by simply changing the examples, making it a highly flexible prompt engineering strategy.

Consider the task of classifying emails. Instead of explaining the criteria for each category, you can provide a few examples: "Classify emails as Urgent/Normal/Spam. Example 1: 'Meeting moved to 3pm' = Normal. Example 2: 'Server down, need immediate help' = Urgent. Example 3: 'Congratulations! You won a free cruise!' = Spam. Now classify: 'Your account will be closed today'". This approach clearly demonstrates the desired output and enables the LLM to accurately categorize new emails based on the provided examples. Another example lies in generating product descriptions. By showcasing 2-3 examples of product descriptions adhering to your brand's specific tone and style, you can effectively guide the LLM to generate new descriptions that seamlessly align with your brand identity.
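The email-classification prompt above can be generated from data rather than typed by hand, which keeps the example format identical every time. A minimal Python sketch; `few_shot_prompt` is a hypothetical helper, and its string layout simply mirrors the prompt in the text.

```python
EXAMPLES = [
    ("Meeting moved to 3pm", "Normal"),
    ("Server down, need immediate help", "Urgent"),
    ("Congratulations! You won a free cruise!", "Spam"),
]


def few_shot_prompt(examples, new_input, labels=("Urgent", "Normal", "Spam")):
    """Render labeled examples in one consistent format, then the new input."""
    lines = [f"Classify emails as {'/'.join(labels)}."]
    for i, (text, label) in enumerate(examples, start=1):
        lines.append(f"Example {i}: '{text}' = {label}")
    # End with the same pattern, leaving the label blank for the model to fill in.
    lines.append(f"Now classify: '{new_input}' =")
    return "\n".join(lines)


print(few_shot_prompt(EXAMPLES, "Your account will be closed today"))
```

Ending the prompt with the same `'...' =` pattern as the examples nudges the model to answer with a single label rather than a paragraph.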

While few-shot learning is a powerful tool, it’s essential to be aware of its potential drawbacks. Crafting high-quality examples can be time-consuming, particularly for complex tasks. Furthermore, relying heavily on examples can potentially limit the model's creativity by constraining its responses to the provided patterns. Poorly chosen or unrepresentative examples can mislead the model and produce inaccurate results. Finally, including multiple examples inevitably increases prompt length and token usage, which might have cost implications depending on the LLM provider.

To maximize the effectiveness of few-shot learning, consider the following tips:

- Use between two and five examples as a starting point, balancing sufficient guidance against prompt length.

- Ensure your examples represent different variations of the task to cover a broader spectrum of potential inputs.

- Create high-quality, representative examples that accurately reflect the desired output format and style.

- Maintain consistent formatting across all examples to avoid confusion.

- Include edge cases or unusual scenarios to improve the model's ability to handle diverse inputs.
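The tips on example count and coverage can be enforced when examples are drawn from a larger labeled pool. The sketch below is one way to do it in Python, assuming examples are stored as `(text, label)` pairs; `select_examples` and the sample pool are hypothetical, and the 2 to 5 bound mirrors the guideline above rather than any hard rule.

```python
import random
from collections import defaultdict


def select_examples(pool, k=4, seed=0):
    """Pick k labeled examples (2 <= k <= 5), covering every label when possible.

    One example per label is taken first so each category is demonstrated;
    remaining slots are filled at random from the rest of the pool.
    """
    if not 2 <= k <= 5:
        raise ValueError("use between 2 and 5 examples")
    rng = random.Random(seed)
    by_label = defaultdict(list)
    for text, label in pool:
        by_label[label].append((text, label))
    chosen = [rng.choice(items) for items in by_label.values()][:k]
    remaining = [ex for ex in pool if ex not in chosen]
    rng.shuffle(remaining)
    chosen += remaining[: k - len(chosen)]
    rng.shuffle(chosen)  # avoid always presenting labels in the same order
    return chosen


pool = [
    ("Meeting moved to 3pm", "Normal"),
    ("Lunch order for Friday", "Normal"),
    ("Server down, need immediate help", "Urgent"),
    ("Payment system failing for all users", "Urgent"),
    ("Congratulations! You won a free cruise!", "Spam"),
    ("Claim your prize now", "Spam"),
]
selected = select_examples(pool, k=4)
```

Shuffling the final selection is a design choice: always listing labels in the same order can bias the model toward whichever label appears last.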

Few-shot learning deserves its place amongst essential prompt engineering tips due to its ability to significantly improve output quality and consistency. By demonstrating rather than describing, it streamlines the communication process between the user and the LLM, leading to more efficient and accurate results, especially for tasks requiring specific formatting, style, or nuanced understanding. By understanding the benefits, limitations, and best practices of few-shot learning, you can harness its power to unlock the full potential of LLMs and generate highly tailored outputs for a wide range of applications.

4. Break Complex Tasks into Steps (Chain-of-Thought)

Chain-of-thought (CoT) prompting is a powerful technique in prompt engineering that significantly improves the performance of large language models (LLMs) on complex reasoning tasks. Instead of asking the AI for a direct answer, CoT prompting encourages the model to break down the problem into smaller, more manageable steps, revealing its reasoning process along the way. This systematic approach allows the LLM to tackle intricate problems with greater accuracy and transparency, making it an invaluable tool for software developers, AI/ML practitioners, data scientists, DevOps engineers, and technical project managers alike.

This method guides the LLM through a logical sequence of steps, mimicking how humans approach problem-solving. By explicitly asking the model to "show its work," we get a visible record of how it reached its answer. This transparency helps verify the accuracy of the final output and makes it easier to spot and correct errors in the reasoning chain. Instead of a "black box" answer, CoT gives you a window into the model's stated reasoning, which supports trust and understanding, although the written steps are not guaranteed to reflect exactly how the model arrived at its answer.

For instance, instead of simply asking "What's the ROI of this marketing campaign?", a CoT prompt would be structured as follows: "Calculate the ROI of this marketing campaign. First, identify all costs associated with the campaign (advertising, salaries, etc.). Second, calculate the total revenue generated by the campaign. Third, subtract the total costs from the revenue to get the net profit. Fourth, apply the ROI formula (Net Profit / Cost of Investment x 100). Show your work for each step." This structured approach pushes the model to consider each element of the problem systematically, leading to a more accurate and reliable result. Another example could be analyzing a business problem: "Analyze this business problem step by step: 1) Identify the core issues, 2) List potential solutions, 3) Evaluate the pros and cons of each solution, 4) Recommend the best approach and justify your choice."
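To check a model's step-by-step ROI answer, it helps to run the same steps yourself. A minimal Python sketch of the calculation the prompt asks for; the cost and revenue figures are invented purely for illustration.

```python
def campaign_roi(costs, revenue):
    """Compute ROI (%) following the same steps as the CoT prompt."""
    # Step 1: total all campaign costs.
    total_cost = sum(costs.values())
    # Step 2: revenue attributed to the campaign is taken as given.
    # Step 3: net profit = revenue - total costs.
    net_profit = revenue - total_cost
    # Step 4: ROI = net profit / cost of investment x 100.
    return net_profit / total_cost * 100


costs = {"advertising": 12_000, "salaries": 8_000}  # hypothetical figures
print(campaign_roi(costs, revenue=30_000))  # 50.0
```

If the model's intermediate totals differ from these, the mismatch points to the exact step where its reasoning went wrong.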

The benefits of using CoT prompting are numerous. It can substantially improve accuracy on complex reasoning tasks, particularly with larger models; it provides insight into the model's reasoning, making incorrect responses easier to debug; and it builds trust through a transparent methodology. Moreover, by observing the AI's reasoning, we can identify learning opportunities and refine our own problem-solving strategies. However, CoT also has drawbacks. The detailed responses consume more tokens, resulting in potentially higher costs and slower processing times. For simple tasks, CoT can be overkill, adding unnecessary complexity. Furthermore, even seemingly logical steps can contain errors, so the reasoning still requires careful review.

Here are some actionable tips for effectively using Chain-of-Thought prompting:

- Use guiding phrases: Incorporate phrases like "step by step," "think through this," "show your work," "justify your reasoning," and "explain your logic."

- Explicit reasoning: Always ask for explicit reasoning before the final answer. This helps understand the model's path to the conclusion.

- Numbered steps: Numbering the steps encourages a systematic approach and makes the response easier to follow.

- Domain expertise: Combine CoT with specific domain expertise within the prompt for more accurate and relevant results.

- Review and validate: Carefully review the reasoning steps for logical consistency and factual accuracy, even if they appear sound at first glance.
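Several of these tips can be built into a reusable template. The Python sketch below numbers the steps, asks for the reasoning before the answer, and marks the final answer so it can be pulled out of a long response for review. The "Final answer:" convention, both helper names, and the churn scenario are assumptions for illustration, not a standard.

```python
import re


def cot_prompt(question, steps):
    """Ask for numbered reasoning steps before a clearly marked final answer."""
    numbered = "\n".join(f"{i}) {step}" for i, step in enumerate(steps, start=1))
    return (
        f"{question}\n"
        "Think through this step by step and show your work for each step:\n"
        f"{numbered}\n"
        "After the steps, give your conclusion on a final line starting with 'Final answer:'."
    )


def extract_final_answer(response):
    """Return the text after the last 'Final answer:' line, or None if absent."""
    matches = re.findall(r"^Final answer:\s*(.+)$", response, flags=re.MULTILINE)
    return matches[-1].strip() if matches else None


prompt = cot_prompt(
    "Analyze this business problem: customer churn rose 15% last quarter.",  # hypothetical
    ["Identify the core issues", "List potential solutions",
     "Evaluate the pros and cons of each solution",
     "Recommend the best approach and justify your choice"],
)
response = ("1) Total costs: $20,000\n2) Revenue: $30,000\n3) Net profit: $10,000\n"
            "4) ROI: 10,000 / 20,000 x 100\nFinal answer: 50%")
print(extract_final_answer(response))  # 50%
```

Keeping the reasoning separate from the extracted answer makes it easier to review the steps without losing track of the conclusion.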

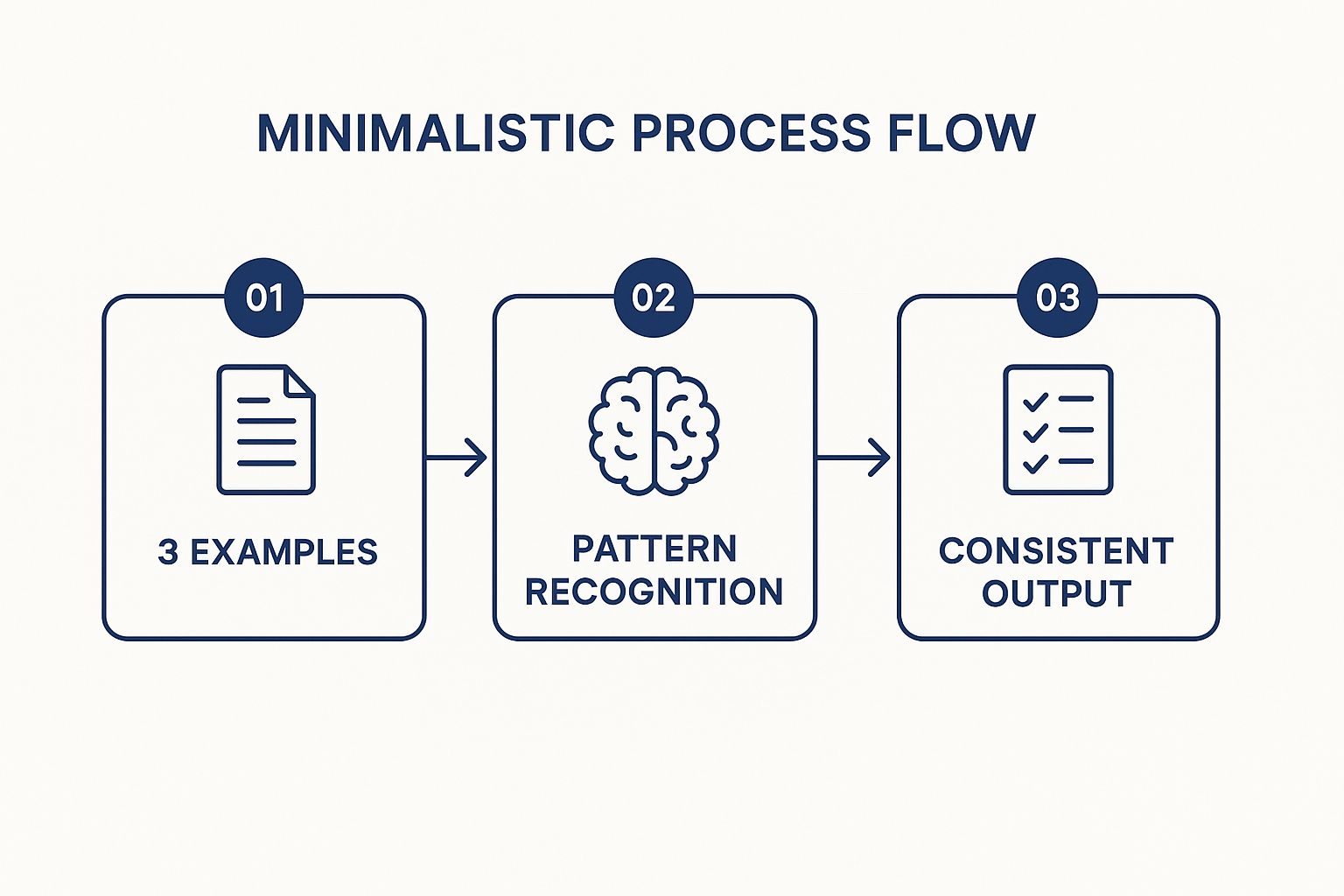

The infographic above illustrates how CoT prompting can produce more consistent outputs when it is combined with worked examples: providing multiple examples (Step 1) guides the AI to recognize patterns in reasoning and problem-solving (Step 2), which leads to more consistent and predictable outputs (Step 3) and improves the reliability of the LLM.

Chain-of-Thought prompting has been popularized by leading research organizations including Google Research (Wei et al., 2022) and the OpenAI research team, and is rapidly gaining traction within the broader academic prompt engineering community. Its ability to unlock the reasoning capabilities of LLMs makes it a crucial technique for anyone seeking to leverage the full potential of AI for complex problem-solving.

5. Set Context and Constraints

Effective prompt engineering is more than just asking a question; it's about crafting a detailed request that guides the AI towards the desired output. A critical aspect of this is setting context and constraints, a technique that significantly improves the relevance, appropriateness, and consistency of AI-generated content. This involves providing the AI with background information and explicit limitations to frame its response, ensuring it aligns with specific situations, target audiences, and project requirements. Mastering this technique is essential for anyone looking to leverage the power of AI for content creation, code generation, or problem-solving. This makes it a crucial inclusion in any list of prompt engineering tips.

Context setting involves providing the AI with the necessary background information to understand the specific situation and desired outcome. Think of it as briefing the AI before assigning a task. This background might include details about your company, your target audience, the purpose of the content, and any relevant industry information. Constraints, on the other hand, set boundaries for the AI's response. These can be limitations on length, format, tone, style, or even specific content to avoid. Together, context and constraints act as guardrails, guiding the AI towards a more focused and useful output.

Here's how context and constraints work together: Imagine you're asking an AI to write a marketing email. Simply asking it to "write a marketing email" will likely result in a generic, uninspired output. However, if you provide context like, "Our company is a B2B SaaS startup targeting small businesses. We value transparency and practical solutions," and add constraints such as, "Keep the email under 200 words and avoid technical jargon," you drastically increase the chances of receiving a relevant and effective email. The AI now understands the brand voice, the target audience, and the desired length, allowing it to generate a more targeted and impactful message.
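The email example can be expressed as a small Python sketch: the context and constraints are fixed strings reused across requests, and a simple checker flags drafts that break the constraints that can be verified mechanically. The jargon list and helper names are hypothetical, and tone and relevance still need human review.

```python
import re

CONTEXT = (
    "Our company is a B2B SaaS startup targeting small businesses. "
    "We value transparency and practical solutions."
)
MAX_WORDS = 200
JARGON = ["API", "microservices", "synergy"]  # hypothetical list of terms to avoid


def email_prompt(task):
    """Background first, then the task, then explicit constraints."""
    return (
        f"Context: {CONTEXT}\n"
        f"Task: {task}\n"
        f"Constraints: Keep the email under {MAX_WORDS} words and avoid technical jargon."
    )


def constraint_violations(draft):
    """Flag breaches of the constraints that can be checked mechanically."""
    problems = []
    if len(draft.split()) >= MAX_WORDS:
        problems.append("too long")
    for term in JARGON:
        # Whole-word, case-insensitive match, so "rapid" does not trigger "API".
        if re.search(rf"\b{re.escape(term)}\b", draft, flags=re.IGNORECASE):
            problems.append(f"jargon: {term}")
    return problems


print(email_prompt("Announce our new invoicing feature."))
```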

Examples of Effective Context and Constraint Setting:

- Context: "We're developing a Python script for automated data analysis within a financial institution. Security and data integrity are paramount." Constraint: "Provide code with detailed comments explaining each function and ensure it adheres to industry best practices for data security."

- Context: "Writing a blog post for software developers about the latest advancements in machine learning for natural language processing." Constraint: "Use a technical tone, provide code examples in Python, and keep the post between 800-1000 words."

- Context: "Creating a chatbot for a customer support team handling inquiries about online orders." Constraint: "Maintain a friendly and helpful tone, provide clear and concise answers, and offer options for escalating complex issues to a human agent."

Tips for Setting Context and Constraints:

- Be Specific with Company/Brand Information: Include details about your brand voice, values, and target audience.

- Define Target Audience Demographics and Needs: The more you tell the AI about your audience, the better it can tailor its response.

- Establish Clear Boundaries: Specify the desired tone, length, format, and content type. This is crucial for maintaining consistency and preventing off-topic responses.

- Provide Industry or Domain-Specific Context: This helps the AI understand the nuances of your field and generate more relevant content.

- Include Legal, Ethical, or Brand Constraints: Specify any restrictions on language, content, or topics to avoid legal issues or brand damage.

- Update Context Regularly: As your business evolves or your needs change, remember to update the context you provide to the AI.
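To keep context consistent across requests and easy to update, it can live in one place rather than being retyped into each prompt. A minimal Python sketch using a frozen dataclass; the `BrandContext` fields are illustrative assumptions, and each update produces a new numbered version so older outputs can be traced back to the context that produced them.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class BrandContext:
    """Background and boundaries shared by every prompt (hypothetical fields)."""
    company: str
    audience: str
    voice: str
    banned_topics: tuple = ()
    version: int = 1

    def render(self):
        """Format the context as a block to prepend to any prompt."""
        lines = [
            f"Company: {self.company}",
            f"Audience: {self.audience}",
            f"Voice: {self.voice}",
        ]
        if self.banned_topics:
            lines.append("Do not mention: " + ", ".join(self.banned_topics))
        return "\n".join(lines)


ctx_v1 = BrandContext(
    company="B2B SaaS startup for small businesses",
    audience="Owners of businesses with 5-50 employees",
    voice="Transparent, practical, jargon-free",
)
# When the business changes, create a new version instead of editing prompts by hand.
ctx_v2 = replace(ctx_v1, banned_topics=("competitor pricing",), version=2)
print(ctx_v2.render())
```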

Pros and Cons of Setting Context and Constraints:

Pros:

- More Relevant and Targeted Responses: Context helps the AI understand your specific needs.

- Reduced Risk of Inappropriate Content: Constraints prevent the AI from generating off-brand or harmful content.

- Improved Consistency Across Multiple Requests: Consistent context ensures consistent outputs.

- Enhanced Control Over Output: You gain greater control over the style, tone, and content of the AI-generated output.

Cons:

- Requires Deep Understanding of Context Needs: You need to carefully consider the necessary background information.

- Potential Over-Constraining: Too many constraints can stifle creativity and limit the AI's potential.

- Needs Regular Updating: Contexts can change, requiring you to update your prompts accordingly.

By mastering the art of setting context and constraints, you can unlock the true potential of AI and ensure it consistently generates high-quality, relevant, and appropriate outputs tailored to your specific needs. This crucial prompt engineering technique empowers you to steer the AI in the right direction, transforming it from a general-purpose tool into a powerful, customized solution.

6. Use Iterative Refinement

Iterative refinement is a crucial prompt engineering tip that deserves a prominent place in any best practices list. It’s the secret sauce that transforms good prompts into great ones, ultimately leading to more accurate, relevant, and valuable outputs from your AI models. This technique emphasizes a continuous improvement methodology, treating prompt engineering not as a one-time task, but as an ongoing optimization process. Instead of aiming for perfection on the first attempt, you systematically test, evaluate, and adjust your prompts based on real-world results. This cyclical process allows you to fine-tune your prompts over time, ensuring they are highly effective for your specific needs.

For software developers, AI/ML practitioners, DevOps engineers, data scientists, and technical project managers alike, understanding and applying iterative refinement can significantly enhance their interactions with AI models. This approach is especially relevant in today's rapidly evolving technological landscape, where AI models and their capabilities are constantly being updated.

So how does it work in practice? Iterative refinement involves a series of steps:

- Step 1: Initial Prompt Creation. Start with a clear, concise prompt that reflects your desired output. Don’t worry about making it perfect at this stage.

- Step 2: Testing and Evaluation. Test your prompt with a variety of inputs and evaluate the quality of the generated output. Consider using diverse test cases to ensure your prompt performs well across different scenarios. Define clear metrics for evaluating the output, such as accuracy, relevance, fluency, and creativity.

- Step 3: Analysis and Adjustment. Analyze the results of your testing. Identify any shortcomings or areas for improvement. Based on this analysis, adjust your prompt by modifying keywords, adding constraints, or restructuring the phrasing.

- Step 4: Repeat. Repeat steps 2 and 3 until you achieve your desired level of performance. This continuous feedback loop is the heart of iterative refinement.
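The test-and-repeat part of this loop is easy to automate once you have test cases and a metric. The Python sketch below assumes a hypothetical `generate(prompt, case)` function that calls your model and a hypothetical `score(output, case)` function returning a value between 0 and 1. Step 3 is still done by a person, so each revised prompt is represented here as the next entry in `candidates`; the toy stand-ins at the bottom exist only so the sketch runs without a model.

```python
from statistics import mean


def evaluate(prompt, generate, test_cases, score):
    """Step 2: run one prompt version on every test case and average the scores."""
    return mean(score(generate(prompt, case), case) for case in test_cases)


def refine(candidates, generate, test_cases, score, target=0.9):
    """Steps 2-4: test successive prompt revisions until one meets the target."""
    best_prompt, best_score = None, float("-inf")
    for prompt in candidates:
        result = evaluate(prompt, generate, test_cases, score)
        if result > best_score:
            best_prompt, best_score = prompt, result
        if best_score >= target:
            break  # good enough: stop iterating
    return best_prompt, best_score


# Toy stand-ins so the sketch runs without a model (hypothetical).
def fake_generate(prompt, case):
    return case.upper() if "uppercase" in prompt else case


def exact_match(output, case):
    return 1.0 if output == case.upper() else 0.0


versions = ["Echo the input.", "Return the input in uppercase."]
best, best_score = refine(versions, fake_generate, ["abc", "xyz"], exact_match)
print(best, best_score)  # Return the input in uppercase. 1.0
```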

This approach offers several key features that contribute to its effectiveness: continuous improvement, systematic testing and evaluation, adaptation based on real-world results, and the opportunity to apply version control to prompt development, much like traditional software development. The parallels with software development automation are direct: just as code requires continuous testing and refinement, so do the prompts that drive AI models.

Examples of Successful Implementation:

- Customer Service Chatbot: Imagine developing a chatbot for customer support. You might start with basic prompts and then test them with real customer interactions. By analyzing customer satisfaction scores and identifying common issues, you can iteratively refine the prompts to provide more helpful and accurate responses. Perhaps you discover customers frequently ask about return policies. Adjusting the prompt to specifically address this topic can improve user experience.

- Content Generation: Suppose you're using an AI model to generate marketing copy. Begin with simple prompts and analyze the quality of the output. Are engagement metrics low? Is the content missing key information? Iteratively adjust the prompt based on feedback and metrics to improve the clarity, persuasiveness, and overall effectiveness of the generated content.

Tips for Effective Iterative Refinement:

- Keep detailed logs: Track the different versions of your prompts and their corresponding performance metrics. This allows you to understand the impact of your changes and revert to previous versions if necessary.

- Diverse testing: Test your prompts with a wide range of inputs and scenarios to ensure robustness and identify potential weaknesses.

- Clear success metrics: Establish clear metrics for evaluating prompt performance before you start the iterative process. This provides a benchmark for measuring progress.

- Small, measurable changes: Make incremental changes between iterations. This allows you to isolate the impact of each modification and avoid introducing new problems.

- Regular feedback: Gather feedback from end users regularly to gain valuable insights into how your prompts are performing in real-world scenarios.

- A/B testing: When possible, use A/B testing to compare the performance of different prompt versions.
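The logging and small-change tips can be supported with very little code. A minimal Python sketch of an in-memory prompt log; `PromptLog` is hypothetical, the scores are made up, and in practice you might keep the same records in version control or a database. Comparing scores between consecutive versions shows the effect of each single change, though it is not a substitute for a properly randomized A/B test.

```python
from dataclasses import dataclass, field


@dataclass
class PromptLog:
    """In-memory history of prompt versions and their scores (hypothetical helper)."""
    entries: list = field(default_factory=list)

    def record(self, prompt, score, note=""):
        """Log a new version; `note` describes the single change made."""
        self.entries.append(
            {"version": len(self.entries) + 1, "prompt": prompt, "score": score, "note": note}
        )

    def best(self):
        """Return the highest-scoring version, e.g. to revert to it."""
        return max(self.entries, key=lambda e: e["score"])

    def delta(self, a, b):
        """Score change from version a to version b (positive means b did better)."""
        return self.entries[b - 1]["score"] - self.entries[a - 1]["score"]


log = PromptLog()
log.record("Summarize the ticket.", 0.62)
log.record("Summarize the ticket in 3 bullet points.", 0.71, note="added format")
log.record("Summarize the ticket in 3 bullet points for on-call engineers.", 0.68, note="added audience")
print(log.best()["version"])  # 2
```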

Pros and Cons of Iterative Refinement:

Pros:

- Significantly better long-term results

- Adapts to changing requirements and contexts

- Builds institutional knowledge about effective prompting

- Reduces risk of major failures through gradual improvement

- Enables optimization for specific use cases

Cons:

- Requires time and resource investment

- Can be slow to show initial results

- Needs systematic tracking and documentation

- May lead to over-optimization for specific cases

While iterative refinement requires an investment of time and resources, the benefits far outweigh the costs. By adopting this approach, you can unlock the full potential of AI models and ensure that they deliver consistently high-quality results that meet your specific needs, ultimately making this prompt engineering tip essential for any serious practitioner.

7. Leverage Output Formatting and Structure

One of the most powerful prompt engineering tips you can employ is leveraging output formatting and structure. This technique allows you to dictate precisely how the AI should present its response, ensuring consistency, readability, and usability, regardless of the complexity of the query. By providing explicit templates and formatting instructions within your prompts, you can shape the AI's output to perfectly match your specific needs and downstream applications. This is crucial for software developers, AI/ML practitioners, DevOps engineers, data scientists, and technical project managers seeking to integrate AI-generated content seamlessly into their workflows.

Instead of receiving a block of unstructured text, you can instruct the AI to deliver the information in a structured format like JSON, a markdown table, or a numbered list. This structured output isn't just aesthetically pleasing; it unlocks a world of possibilities for automating the processing and integration of AI-generated content. Imagine querying an AI about potential project risks and receiving the output directly as a JSON object ready to be ingested by your project management software. That's the power of output formatting.

This approach is particularly beneficial when dealing with complex data or when consistency across multiple AI responses is critical. For example, if you’re comparing different cloud providers, you could instruct the AI to present its analysis in a table format with consistent columns for pricing, features, and performance metrics. This consistent structure makes comparing the options significantly easier and eliminates the need for manual reformatting.
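Because every response uses the same columns, the table can be turned into data instead of being reformatted by hand. A minimal Python sketch, assuming the model returns a plain pipe-delimited markdown table; `parse_markdown_table` is a hypothetical helper and the provider rows are placeholders, not real data.

```python
def parse_markdown_table(text):
    """Turn a simple pipe-delimited markdown table into a list of row dicts.

    Assumes one header row, then a separator row such as |---|---|, and no
    escaped pipes inside cells.
    """
    lines = [line.strip() for line in text.strip().splitlines() if line.strip()]
    rows = [[cell.strip() for cell in line.strip("|").split("|")] for line in lines]
    header, body = rows[0], rows[2:]  # rows[1] is the separator row
    return [dict(zip(header, row)) for row in body]


response = """
| Provider   | Pricing | Features | Performance |
|------------|---------|----------|-------------|
| Provider A | Low     | Broad    | High        |
| Provider B | Medium  | Narrow   | Medium      |
"""
rows = parse_markdown_table(response)
print(rows[0]["Pricing"])  # Low
```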

Here's how output formatting and structure enhance your prompt engineering workflow:

- Ensures Consistent Output Structure: Formatting instructions enforce uniformity across all AI-generated responses, making them predictable and easier to handle programmatically.

- Improves Readability and Usability: Structured data is inherently more organized and easier to understand, particularly when dealing with large volumes of information.

- Enables Automated Processing of Responses: Structured outputs, such as JSON or XML, can be parsed by code and passed to other systems without manual reformatting, though they should still be validated, because models do not always follow the requested format exactly.

- Supports Integration with Other Systems and Workflows: Formatted output simplifies the integration of AI-generated content into existing workflows, databases, and reporting tools.

Let’s look at some practical examples:

- JSON format: "Analyze the market trends for electric vehicles and return the analysis as JSON with keys: summary, growth_drivers, challenges, market_size, and forecast." This prompt instructs the AI to deliver its analysis in a machine-readable JSON format, ready for integration into your data analysis pipeline.

- Table format: "Compare the performance of Python and Java for web development. Present the comparison in a markdown table with columns: Feature, Python, Java, and Advantages." This structured output facilitates easy comparison and eliminates the need for manual reformatting.

- Structured list: "Identify the key challenges in implementing DevOps. Format as: ## Problem, ### Root Causes (bullet points), ### Solutions (numbered list), ### Next Steps." This structured approach ensures a clear and organized output, ideal for presentations or reports.
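One way to keep the JSON-format example in sync with the code that consumes it is to generate the prompt from the same list of keys that you later check. A minimal Python sketch; the helper names `build_prompt` and `check_keys` are hypothetical, and the response is invented for illustration.

```python
import json

# Single source of truth for the keys named in the JSON-format prompt.
REQUIRED_KEYS = ["summary", "growth_drivers", "challenges", "market_size", "forecast"]


def build_prompt(topic: str, keys: list[str]) -> str:
    """Compose a JSON-format prompt that names every required key."""
    if len(keys) == 1:
        key_list = keys[0]
    else:
        key_list = ", ".join(keys[:-1]) + ", and " + keys[-1]
    return (
        f"Analyze the market trends for {topic} and return the analysis "
        f"as JSON with keys: {key_list}. Return only the JSON object."
    )


def check_keys(response_text: str, keys: list[str]) -> tuple[list[str], list[str]]:
    """Return (missing, unexpected) keys for a JSON object response."""
    data = json.loads(response_text)
    missing = [k for k in keys if k not in data]
    unexpected = [k for k in data if k not in keys]
    return missing, unexpected


print(build_prompt("electric vehicles", REQUIRED_KEYS))

# Invented response in which the model renamed market_size to size.
reply = '{"summary": "short text", "growth_drivers": [], "challenges": [], "size": "large", "forecast": "up"}'
print(check_keys(reply, REQUIRED_KEYS))  # (['market_size'], ['size'])
```

Because the prompt and the check read from one list, renaming or adding a key updates both places at once, and a renamed field in the response shows up as one missing and one unexpected key.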

While the benefits are substantial, it’s important to acknowledge the potential drawbacks:

- Can be rigid and limit natural expression: Strict formatting requirements might hinder the AI's ability to express nuances in natural language.

- May not work well for all types of content: Highly creative tasks, like story generation, might not benefit from rigid formatting.

- Requires understanding of output requirements upfront: You need to clearly define your formatting needs before crafting the prompt.

- Can make prompts more complex and longer: Detailed formatting instructions can add to the overall length and complexity of your prompts.

Here are some actionable tips for effectively using output formatting:

- Specify exact formatting syntax: Name the desired format (JSON, XML, Markdown, etc.) explicitly and use its exact syntax in any template or example you include.

- Provide template examples for complex structures: For intricate formats, provide examples to guide the AI's response.

- Use consistent field names and data types: This ensures data integrity and simplifies downstream processing.

- Consider downstream processing requirements: Tailor the output format to the needs of the systems that will consume it.

- Test formatting with edge cases and varying content lengths: Ensure the formatting holds up under different scenarios.

- Include validation rules when necessary: For critical applications, state the rules in the prompt (required fields, allowed values, data types) and check each response against them before using it.
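The last two tips can be combined into a small validation layer. The Python sketch below assumes a hypothetical schema that maps each field to its expected type; the field names are illustrative, not from any specific tool. It also strips a surrounding markdown code fence before parsing, an edge case worth testing because a model may wrap JSON in a fence even when asked not to.

```python
import json
import re

# Hypothetical validation rules: field name -> expected Python type.
SCHEMA = {"title": str, "priority": int, "tags": list}

# Matches a whole response wrapped in a ``` or ```json fence.
FENCE = re.compile(r"^```(?:json)?\s*(.*?)\s*```$", re.DOTALL)


def strip_fence(text: str) -> str:
    """Remove a surrounding markdown code fence, if present."""
    text = text.strip()
    match = FENCE.match(text)
    return match.group(1) if match else text


def validate(text: str, schema: dict[str, type]) -> list[str]:
    """Return a list of problems; an empty list means the response passed."""
    try:
        data = json.loads(strip_fence(text))
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["expected a JSON object"]
    errors = []
    for field, expected in schema.items():
        if field not in data:
            errors.append(f"missing field: {field}")
            continue
        value = data[field]
        # bool is a subclass of int, so isinstance(True, int) is True; reject it explicitly.
        wrong_bool = isinstance(value, bool) and expected is not bool
        if wrong_bool or not isinstance(value, expected):
            errors.append(f"{field}: expected {expected.__name__}, got {type(value).__name__}")
    return errors


# Edge cases: fenced output, a wrong type, and truncated JSON.
print(validate('```json\n{"title": "Fix login", "priority": 2, "tags": ["auth"]}\n```', SCHEMA))  # []
print(validate('{"title": "Fix login", "priority": "high", "tags": []}', SCHEMA))  # ['priority: expected int, got str']
print(validate('{"title": "Fix login", "prio', SCHEMA))
```

Returning a list of problems instead of raising on the first one makes it easy to log every issue, or to feed the messages back to the model in a follow-up prompt asking it to correct its output.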

Leveraging output formatting and structure elevates your prompt engineering practice and lets you extract more value from AI interactions. By applying these tips, you can streamline your workflows, automate processing, and make AI-generated data easier to analyze. For related best practices on code structuring, see the linked article: properly structured code, like well-formatted AI responses, is easier to read and maintain, and the same principle makes structured AI output easier to use and automate. By mastering output formatting, you turn the AI from a simple text generator into a tool that produces structured, actionable data.

7 Tips for Effective Prompt Engineering

| Tip Title | 🔄 Implementation Complexity | 💡 Resource Requirements | 📊 Expected Outcomes | ⚡ Ideal Use Cases | ⭐ Key Advantages |
|---|---|---|---|---|---|
| Be Specific and Clear in Your Instructions | Medium - requires upfront planning and detail | Moderate - needs domain knowledge and clarity | High accuracy and consistent output | General prompting across various tasks | Reduces ambiguity, saves time, consistent quality |
| Use Role-Based Prompting | Medium - needs role definition and alignment | Moderate - understanding of roles/expertise | Professional, authoritative, targeted outputs | Expert advice, domain-specific tasks | Activates domain knowledge, consistent voice |
| Implement Few-Shot Learning Examples | High - requires selecting and formatting examples | High - time and effort to prepare good examples | Very consistent and pattern-following results | Complex or nuanced formatting and creative tasks | Improves consistency, reduces detailed instructions |
| Break Complex Tasks into Steps (Chain-of-Thought) | High - requires designing stepwise instructions | Moderate - more tokens and processing time | Higher accuracy on complex/multi-step problems | Complex reasoning, problem-solving, analysis | Improves accuracy and transparency in reasoning |
| Set Context and Constraints | Medium - requires deep understanding of context | Moderate - domain/context knowledge needed | More relevant and targeted responses | Customized audience-specific or sensitive tasks | Ensures appropriateness and relevance |
| Use Iterative Refinement | High - ongoing process with tracking and testing | High - needs time, feedback, and documentation | Significantly improved long-term prompt quality | Continuous improvement and optimization | Adaptable, reduces failure risk, builds knowledge |
| Leverage Output Formatting and Structure | Medium - requires specifying formats/templates | Moderate - understanding output requirements | Usable, readable, consistent structured outputs | Data processing, reporting, automated workflows | Saves post-processing effort, enables automation |

Mastering the Art of Prompt Engineering

This article has explored seven key prompt engineering tips that can significantly enhance your interactions with AI models. We've covered the importance of clear and specific instructions, using role-based prompting and few-shot learning, breaking down complex tasks into smaller steps (chain-of-thought prompting), setting appropriate context and constraints, using iterative refinement, and leveraging output formatting and structure. Mastering these techniques is key to unlocking the potential of AI and transforming your workflows, whether you're generating creative content, conducting complex analysis, or automating routine tasks. These prompt engineering tips help you communicate effectively with AI and get more precise, relevant results.

If you're looking for ways to enhance your prompt development process and push the boundaries of what's possible with AI, remember that cultivating creativity is crucial. For more inspiration and techniques, especially when developing prompts, check out this resource on how to spark creativity and generate innovative ideas.

By embracing these prompt engineering strategies, you can significantly boost your productivity and achieve remarkable outcomes. Effective prompting isn't just about giving instructions; it's about guiding the AI towards insightful and impactful results. Ready to streamline your AI workflow even further? TreeSnap can prepare your codebase for seamless LLM integration by providing a GPT-ready bundle in seconds, allowing you to focus on crafting powerful and effective prompts.